Frequently asked questions

What license covers the SARUAV system?

SARUAV Ltd. grants spatially-limited licenses. This is both a legal (license conditions) and technical limitation (for each implementation, it is necessary to prepare dedicated spatial data, and the automatic detection of people works in the area specified by the customer).

The minimum license period is three months. The longest licenses are concluded for three years (county- or province-size licenses) or four years (licenses for areas larger than a single province). Free test licenses are granted for one month.

What does the license fee depend on?

There are two types of licenses: standard (county- or province-size) and non-standard (areas greater than a province). Several factors are taken into account when calculating the license fee, e.g. the license period, the number of counties/provinces (for standard licenses) or the area of terrain (for non-standard licenses), and the number of computers on which the system is to be installed.

Each non-standard deployment requires a separate evaluation, also taking into account specific conditions of warranty and service.

What does the implementation process look like?

For each deployment, we prepare an archive file. The client extracts it and, following the installation manual, installs the system on a personal computer. If any problems arise during installation, we support the client through conference calls or videoconferences. Each license agreement covers one training session conducted remotely.

What warranty and service do you provide?

Because the selection and purchase of a drone and a computer remain the client's responsibility, during the license period we service only the software developed by SARUAV Ltd.

Service is offered along the lines of the following terms included in the license agreement:

“In the case of failure of the SARUAV system, the Licensee must notify the Licensor of this fact not later than within seven days from when it has become aware of this event. Within fourteen days from the notification receipt, the Licensor undertakes to commence the resolution of failure or other event (response time), while the Licensee must deliver within this time limit the computer hardware to the Licensor to resolve the failure, unless its scope does not require any interference with the computer hardware where the app is to be installed. Any decision within this scope is made by the Licensor after an initial analysis of the reported issue.”

What requirements must the computer meet to run the system?

The computer requirements are the following:

| | minimum | recommended |
|---|---|---|
| GPU | NVIDIA GeForce GTX 1660 6GB | NVIDIA GeForce RTX with >=6GB VRAM: 2060, 2070, 2080, 3060, 3070, 3080, 4050, 4060, 4070, 4080, 4090 |
| CPU | Intel i5 or AMD Ryzen 5 | Intel i7 or AMD Ryzen 7 |
| RAM | 8GB | 16GB |
| HDD | depending on the size of the implementation (at least 10GB of disk space for province-size terrain or 40GB for Poland) | |
| SOFTWARE | Windows 10, up-to-date graphics card drivers + CUDA Toolkit software + Microsoft Visual C++ Redistributable libraries | |

What are the recommendations for the drone-mounted camera so that the detection can be properly performed?

For the detection to be as effective as possible, and for the coordinates of the indicated persons to be estimated as accurately as possible, the camera should be able to observe the area in the nadir view, i.e. looking vertically down. We require that aerial imagery is saved in the JPG format. Camera parameters and flight altitude should be adjusted so that the ground sampling distance (GSD) is between 1.0 and 2.0 cm/px (optimally around 1.3 cm/px). In other words, the side of a square pixel in the image should correspond to 1.0–2.0 cm in the field.

For example, the system works with cameras installed onboard DJI multirotors (platforms or cameras: Phantom 4 Pro/Advanced/Pro V2.0/RTK, Mavic Enterprise, Mavic 2 Enterprise Advanced, Mavic 2 Enterprise Dual, Mavic 2 Zoom, Mavic Air 2, Air 2S, Mavic Pro, Zenmuse Z30/X4S/H20/H20T/X5S/P1), Yuneec multirotors (cameras: E90, E90X), Autel multirotors (cameras: XT701, XT705, XT709) or the fixed-wing eBee drone (camera: Canon PowerShot S110). Most cameras with a 20 Mpx sensor, when flown at an altitude of approx. 45 meters, provide good photo resolution and a GSD below 1.5 cm/px.
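As a rough check before a flight, the GSD can be estimated from the camera geometry and the flight altitude. The sketch below uses the standard pinhole-camera relation and assumes flat terrain and a nadir view. The function name and the example camera values (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width) are illustrative assumptions for a typical 20 Mpx camera with a 1-inch sensor; they are not part of the SARUAV system and should be replaced with the values from your camera's data sheet.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm,
                                image_width_px, altitude_m):
    """Estimate the GSD in cm/px for a nadir image over flat terrain."""
    # Similar triangles: ground width / altitude = sensor width / focal length.
    ground_width_m = sensor_width_mm / focal_length_mm * altitude_m
    # Spread the ground width over the image width and convert m to cm.
    return ground_width_m / image_width_px * 100.0


# Assumed example values (hypothetical camera, not taken from SARUAV documentation).
gsd = ground_sampling_distance_cm(
    sensor_width_mm=13.2,
    focal_length_mm=8.8,
    image_width_px=5472,
    altitude_m=45.0,
)
print(f"GSD: {gsd:.2f} cm/px")
print("Within the 1.0-2.0 cm/px range:", 1.0 <= gsd <= 2.0)
```

With these assumed values the estimate is about 1.23 cm/px, which agrees with the statement that a 20 Mpx camera flown at approx. 45 meters gives a GSD below 1.5 cm/px.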

What are the flight recommendations?

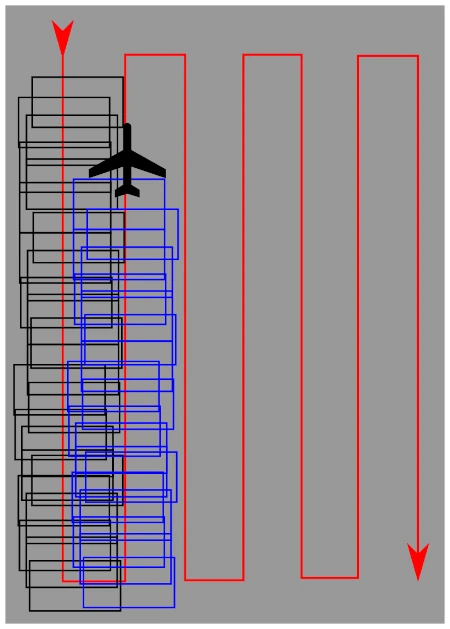

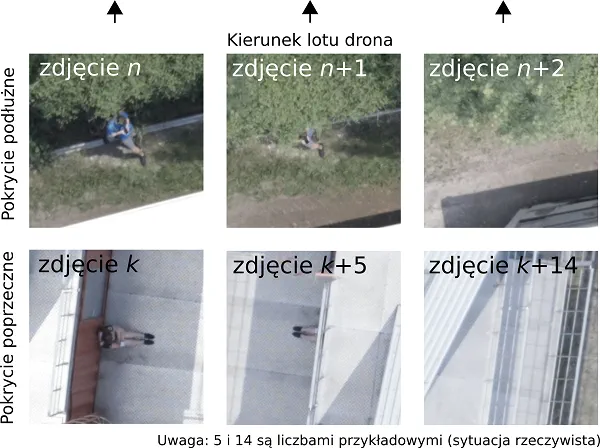

Aerial photos should be nadir images of the terrain, i.e. the camera should point vertically downwards. Acquisition of aerial photos should follow the recommendations commonly accepted in close-range photogrammetry: side overlap should be around 60%, while front overlap should be set to approx. 80% (see the figures in this section).

Such overlap values ensure that every point in the field is photographed in several images, from various directions and at different angles (see the figures in this section). As a result, the risk of overlooking a person is reduced. The considerable front and side overlap does not meaningfully increase the time of the analysis.

In addition, the flight altitude should be matched to the parameters of the drone-mounted camera so that images are of high resolution, corresponding to low GSD values (see above).

To obtain the sharpest possible photos, we recommend that the drone's horizontal speed during a photogrammetric flight does not exceed 2.5–3.0 m/s.
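The overlap and speed recommendations above translate into a distance between consecutive photos, a distance between adjacent flight lines and a camera trigger interval. The sketch below shows that arithmetic under stated assumptions: flat terrain, a nadir camera, and the shorter side of the image aligned with the flight direction. The function name and the example image size (5472 x 3648 px) are illustrative assumptions, not SARUAV settings; in practice these values are set in the external flight planning software.

```python
def photo_spacing(gsd_cm, image_width_px, image_height_px,
                  front_overlap=0.80, side_overlap=0.60, speed_m_s=3.0):
    """Return (shot distance [m], flight-line distance [m], trigger interval [s]).

    Assumes the image height lies along the flight direction and the
    image width lies across it.
    """
    # Ground footprint of a single image in metres.
    footprint_along_m = gsd_cm / 100.0 * image_height_px
    footprint_across_m = gsd_cm / 100.0 * image_width_px
    # Only the non-overlapping part of each footprint is new ground.
    shot_distance_m = footprint_along_m * (1.0 - front_overlap)
    line_distance_m = footprint_across_m * (1.0 - side_overlap)
    # Time between photos at the given horizontal speed.
    interval_s = shot_distance_m / speed_m_s
    return shot_distance_m, line_distance_m, interval_s


# Assumed example: GSD of 1.3 cm/px and a 5472 x 3648 px image (hypothetical camera).
shot, line, interval = photo_spacing(gsd_cm=1.3,
                                     image_width_px=5472,
                                     image_height_px=3648)
print(f"Photo every {shot:.1f} m, flight lines {line:.1f} m apart, "
      f"one photo every {interval:.1f} s")
```

With these assumed values, a photo is needed roughly every 9.5 m along the flight line, flight lines are about 28.5 m apart, and at 3.0 m/s the camera has to take a photo about every 3.2 s.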

Is it possible to detect people from video recordings using the system?

No. Videos are not processed by the system. The system operates only on nadir aerial images taken in accordance with commonly accepted recommendations in close-range photogrammetry (side overlap of around 60% and front overlap of around 80%).

Does the system use infrared photos and videos?

No. Our detection algorithm works only on nadir RGB images. Tests with near-infrared (NIR) imagery were performed, but the detection results were found to be less satisfactory.

We also do not work on images acquired in the far infrared (thermal imaging).

Does the system work at night?

Our detection algorithms operate on high-resolution RGB images captured in daylight, so photos captured at night are of limited use. Even where night flights are legally permitted, the photos obtained during such flights are often too poor in quality for the detection to be effective.

What is the data processing output and how long does it take to obtain the results of the analysis?

Maps of the theoretical range of human movement as well as maps with automatically indicated locations of objects resembling people are created in real time on a fast laptop, operating completely offline, without access to the Internet.

The detection of people in the photos is possible after the unmanned aerial vehicle has landed and the images have been downloaded to the laptop on which the system is installed. For example, detection on approximately 100 aerial images along with full visualization is carried out in 2–3 minutes.

Does the system automatically generate the drone mission?

No. The area to be flown can be chosen on the basis of the recommendations produced by the system, but the final decision on where the drone should fly rests with the people responsible for planning the search. They have the appropriate management experience and are responsible for the optimal allocation of resources in the field.

The responsibility for planning the drone route and performing the flight appropriately lies with the drone operator, who uses external flight planning software that is dedicated to the specific unmanned aerial vehicle and independent of the SARUAV system.

Is it possible for the system to work in real time?

The default operation of the detection module is as follows: the drone takes photos (the SARUAV system does not control the drone; the flight is carried out by the operator) and lands. The memory card is then removed and inserted into the laptop, or the drone is connected to the laptop with a cable. The photos are downloaded and the detection begins. At the moment, image processing takes place on a laptop in the field, at the site of the search, completely offline, but following the procedure described above. It can be regarded as near-real time, taking into account the duration of subsequent flights of one drone.

If the customer has a drone that transfers photos (in good quality) to the computer during the flight, we can adapt our application to work in this mode fairly easily. However, this mode is not available in the current version.

Can the SARUAV system be adapted to detect animals, fire sources or other objects?

We have not carried out such work. The human detection system has been developed over several years and is still being developed. During this period, conceptual work, programming and field experiments were carried out. After such a long period of research, our detectors guarantee high efficiency in detecting people, but we cannot directly translate their potential into the identification of other objects.

SUPPORT

SARUAV Ltd. has been supported within the programme “Wsparcie prawne dla startupów” of the Polish Agency for Enterprise Development, financed from the state budget by the Ministry of Economic Development and Technology of Poland (contract no.: UUW-POPP.01.01.00-00-0078/21-00; UUW-POPP.01.01.00-00-0135/22-00).